Om Malik is a San Francisco based writer, photographer and investor.

Apple has released a new lineup of Macs, including MacBook Pros and the 24-inch iMac, all powered by M3 chips with a new GPU architecture, once again outpacing rival chipmakers. With these new products, Apple is finally embracing AI, in the way it knows best — through hardware and chips. Apple has introduced a new space black finish. And one more thing – the 13-inch MacBook Pro is officially discontinued.

For nearly a year now, I have been playing around with various AI tools on my M1 Mac Studio and an older M1 MacBook Pro. And every time, I have been impressed by how easily these machines took everything in their stride and didn’t even break a sweat. (Translation: no fans came on, the machines never ran out of resources, and occasionally the laptop would get warm, but not toasty.) I’ve heard similar stories from developer friends pushing their laptops even harder with advanced AI tasks, compared to my more amateur attempts.

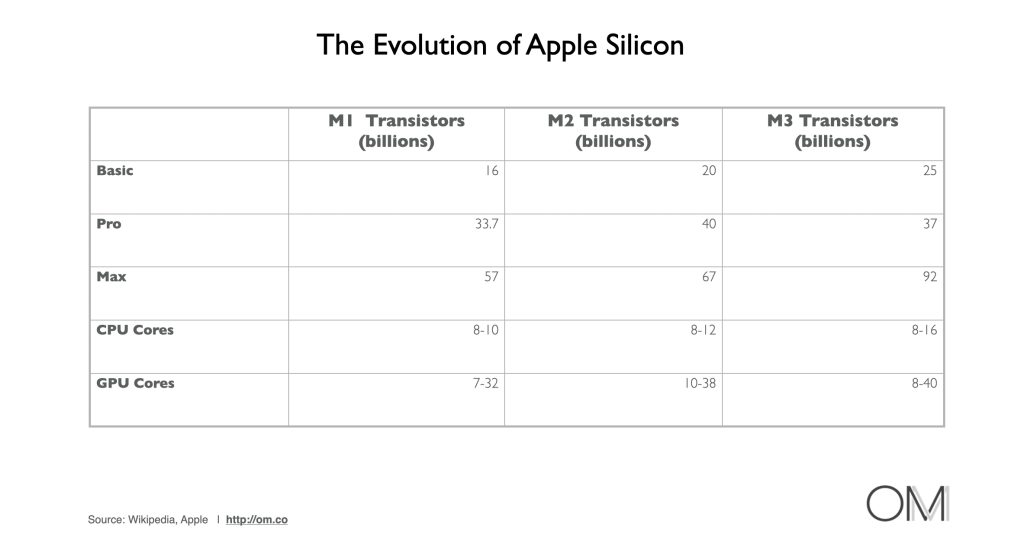

At the Vision Pro launch event, I ran into Apple’s former SVP of Marketing, Phil Schiller, and questioned why Apple wasn’t highlighting the synergy between Apple Silicon Macs and AI. Today, I got the answer — Apple was waiting for the launch of its new M3 chip to flex its AI muscles. Apple’s M3 chip lineup includes the M3, M3 Pro, and M3 Max, blending a new GPU architecture with faster CPUs and larger Unified Memory — up to 128 GB. The M3 Max chip, in particular, is focused on 3D artists, video editors, researchers, and machine learning programmers.

All flavors of M3 chips will be available on the 14 and 16-inch MacBook Pro laptops — though not on the 13-inch MacBook Pro, which has finally been put to rest. It’s about time if you ask me.

The MacBook Air is a much better lower-end option: cheaper and lighter. But for me, the 14-inch MacBook Pro is the ‘Goldilocks’ Apple laptop. Depending on the budget, it marries oomph, Liquid Retina XDR display quality, audio-visual features, and weight, so it is at ease at home or on the go.

When I had a review machine from Apple, it went with me everywhere. Now, I’m planning to trade in my old M1 machine for an M3-version MacBook Pro, as soon as it’s available next month.

***

The M3 Family

M3

M3 has a 10-core GPU that is up to 65 percent faster than M1 for graphics performance. It has an 8-core CPU, with four performance cores and four efficiency cores, that is up to 35 percent faster than M1 for CPU performance. It supports up to 24GB of unified memory.

M3 Pro

M3 Pro has an 18-core GPU that is up to 40 percent faster than M1 Pro. Support for unified memory goes up to 36GB. The 12-core CPU design has six performance cores and six efficiency cores and is up to 30 percent faster than M1 Pro.

M3 Max

M3 Max has a 40-core GPU that is up to 50 percent faster than M1 Max, and it supports up to 128GB of unified memory. The 16-core CPU features 12 performance cores and four efficiency cores and is up to 80 percent faster than M1 Max.

The new M3 chips are coming at an opportune time — Apple’s rivals, Qualcomm, Nvidia, AMD, and Intel, have been making noises about catching up with Apple. When Apple launched the M1 chip, I pointed out:

I don’t think AMD and Intel are Apple’s competitors. We should be looking at Qualcomm as the next significant laptop chip supplier. Apple’s M1 is going to spark an interest in new architectures from its rivals. This approach to integration into a single chip, maximum throughput, rapid access to memory, optimal computing performance based on the task, and adaptation to machine learning algorithms is the future — not only for mobile chips but also for desktop and laptop computers. And this is a big shift, both for Apple and for the PC industry.

Steve Jobs’ Last Gambit

Qualcomm recently announced the Snapdragon X, a PC chip that it says is better than the M2 processor. Nvidia, too, is working on its own chip, as is AMD. All three companies are using Arm’s technology. Intel, on the other hand, is moving forward with its own technologies. Notable as these developments are, they all lack Apple’s big advantage: its vertically integrated platform that allows the company to squeeze more performance and efficiency out of its chips. Its ability to understand the usage behavior of its hardware platform makes Apple’s advantages even more impactful.

“I believe the Apple model is unique and the best model,” Johny Srouji, Apple’s chip czar, said. “We’re developing a custom silicon that is perfectly fit for the product and how the software will use it. When we design our chips, which are like three or four years ahead of time, Craig and I are sitting in the same room defining what we want to deliver, and then we work hand in hand. You cannot do this as an Intel or AMD or anyone else.”

***

The star of the new M3 lineup is the GPU, something the company makes quite obvious in its press release and talking points. The new GPU features “Dynamic Caching,” which, unlike the approach in traditional GPUs, allocates local memory in hardware in real time.

With Dynamic Caching, only the exact amount of memory needed is used for each task. This is an industry first, transparent to developers, and the cornerstone of the new GPU architecture. It dramatically increases the average utilization of the GPU, which significantly increases performance for the most demanding pro apps and games.
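
To make the utilization argument concrete, here is a toy model in Python. It is only a sketch and not Apple's implementation, which the company has not described at this level. It assumes that a traditional GPU reserves the worst-case amount of local memory for every task, while a dynamic scheme reserves only what each task needs. The function name, the per-task sizes, and the 64 KB budget are all hypothetical.

```python
# Toy model only: illustrates why exact-size allocation can raise
# utilization. It does not describe Apple's actual hardware mechanism.

def max_concurrent_tasks(task_needs_kb, local_memory_kb, dynamic):
    """Count how many tasks (in submission order) fit in local memory.

    dynamic=False: every task reserves the worst-case need (assumed
                   behaviour of a static, worst-case allocation).
    dynamic=True:  every task reserves exactly what it needs.
    """
    worst_case = max(task_needs_kb)
    used = 0
    running = 0
    for need in task_needs_kb:
        reserve = need if dynamic else worst_case
        if used + reserve > local_memory_kb:
            break  # no room left for the next task
        used += reserve
        running += 1
    return running


# Hypothetical per-task local memory needs, in KB.
needs = [4, 8, 32, 4, 8, 4, 16, 4]

static_count = max_concurrent_tasks(needs, 64, dynamic=False)
dynamic_count = max_concurrent_tasks(needs, 64, dynamic=True)
print(f"static: {static_count} tasks, dynamic: {dynamic_count} tasks")
```

With these made-up numbers, the static scheme fits two tasks into the budget and the dynamic one fits six, because one memory-hungry task no longer dictates the reservation for all the others.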

This is good news for game developers and those who rely on graphics-intensive apps. I am sure some of us, especially Photoshop and photo-related AI users, will benefit from the new GPU technology. Apple says that, along with other hardware changes, it can now deliver up to 2.5 times the speed of the M1 family of chips.

Additionally, the new GPU brings hardware-accelerated mesh shading to the Mac, delivering greater capability and efficiency to geometry processing, and enabling more visually complex scenes in games and graphics-intensive apps. In fact, the M3 GPU is able to deliver the same performance as M1 using nearly half the power, and up to 65 percent more performance at its peak.

For AI developers and apps like Topaz that utilize machine learning models, Apple is touting the enhanced capabilities of its neural engine. “The Neural Engine is up to 60 percent faster than in the M1 family of chips, making AI/ML workflows even faster,” Apple claims. It would be great to see how Topaz, Adobe, and others can leverage this improvement for tasks such as noise reduction and other visual data enhancements.

***

Dynamic caching is a key part of modern GPU architecture. As workloads evolve, GPUs are evolving too. There was a time when the primary role of GPUs was to drive the video display, especially for graphically intensive tasks such as video games. Now, they are viewed as a crucial component of AI and ML infrastructure — both at the edge and within the core of cloud computing architecture. GPU makers are all focusing on intelligent, workload-specific caching strategies, which is seen as a key area of innovation.

Companies such as Graphcore and Cerebras are using unique caching for their neural network-focused accelerators. Most major GPU manufacturers, including the current industry giant NVIDIA (as well as AMD and Intel), have a version of dynamic caching, though each has its own implementation.

How caching is implemented varies based on the intended use, whether it be for gaming, professional graphics, or data center applications. NVIDIA, for example, employs various forms of cache, including L1/L2 caches and shared memory, which are dynamically managed to optimize performance and efficiency. AMD uses large L3 caches (“Infinity Cache”) to boost bandwidth and reduce latency, an approach beneficial for gaming. Intel’s Xe graphics architecture focuses on smart caching, balancing power efficiency and performance.

Apple’s approach to dynamic caching involves being highly adaptive in allocating memory and resources based on the application(s) to deliver the best performance. Apple’s integrated approach to chip design, combined with energy efficiency and a new take on “dynamic caching” in the GPU, means that end-users can achieve higher GPU utilization. For instance, if you are an AI developer working with large transformer models that have billions of parameters, you can put the entire model in unified memory and access it all at once.
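
A quick back-of-the-envelope check shows what "the entire model in memory" means in practice. The sketch below counts only the weights (parameters times bytes per parameter) and ignores activations and other runtime buffers. The memory ceilings of 24GB, 36GB, and 128GB come from Apple's M3 specifications above; the 13-billion-parameter model size, the 16-bit precision, and the 8 GB held back for the system are assumptions for illustration.

```python
# Rough weight-only estimate; real memory use is higher once
# activations and runtime buffers are included.

def model_size_gb(num_params, bytes_per_param):
    """Size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_in_memory(num_params, bytes_per_param, memory_gb, reserved_gb=8.0):
    """True if the weights fit after reserving memory for the OS and apps.

    reserved_gb is an assumed figure, not something Apple publishes.
    """
    return model_size_gb(num_params, bytes_per_param) <= memory_gb - reserved_gb


params = 13e9          # hypothetical 13-billion-parameter model
fp16_bytes = 2         # 16-bit weights

size = model_size_gb(params, fp16_bytes)
for name, memory_gb in [("M3", 24), ("M3 Pro", 36), ("M3 Max", 128)]:
    verdict = "fits" if fits_in_memory(params, fp16_bytes, memory_gb) else "does not fit"
    print(f"{name} ({memory_gb}GB): {size:.0f} GB of weights {verdict}")
```

Under these assumptions the 26 GB of weights do not fit on a fully loaded M3, squeeze into a fully loaded M3 Pro, and leave plenty of headroom on an M3 Max.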

Interestingly — and Apple hasn’t really highlighted this — the same dynamic caching technology is also available in Apple’s A17 processors.

***

This isn’t the first time I’ve said this, but since the launch of the iPhone and the subsequent smartphone revolution, the concept of computers has undergone a transformation. Computers are shape-shifting, constantly adapting to us, the users.

Time and again, I have made the case that AI is really here to augment our capabilities. I said as much in an article for The New Yorker in 2016, and again last year in The Spectator, where I wrote:

We should think of AI as augmented intelligence, not artificial intelligence, and open our eyes to the positive impacts this technology is having. We live in a complex, data-saturated, increasingly digital world. Our institutions and information flow at the speed of the network — and we need help to make sense of it all. As a species, we are forced to move and react faster. If anything, the demands on our time have multiplied. How do you make sense of it all? You must use technology to help read and digest. When I think of the potential of AI, I think of the software systems that look through millions of scans, that can identify and flag signs of breast cancer. It helps doctors be more vigilant and better armed in order to save lives. Our data-saturated world needs such tools to identify patterns, trends, and correlations.

We have finally put the “personal” in personal computers. Everything is adapting to this reality — with artificial intelligence being an integral part of that transformation.

Chipmakers recognize this — Qualcomm has touted the ability of its Snapdragon X Elite to run generative AI models with over 13 billion parameters on-device, and Apple follows suit. That’s why they’re increasingly emphasizing their new chips and AI capabilities.

Ian Bratt, a technical fellow at chipmaker Arm, recently highlighted that AI workloads are rapidly evolving. With the rise of Large Language Models, AI workloads are becoming more complex and bandwidth-limited. This marks a significant shift from the compute-limited tasks seen in convolutional neural networks. Essentially, the traditional way of designing computer chips is becoming outdated, necessitating a new, more flexible approach to chip design.

AI algorithms function with extreme parallelism. While adding more compute power (and GPUs) can address this, the real challenge lies in how quickly data can be moved, how promptly and extensively memory can be accessed, and the amount of energy required to operate these algorithms efficiently. Apple’s strategy with its silicon has been remarkably prescient, taking into account these realities even in its latest GPU updates.
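
The bandwidth point can be sketched with a common rule of thumb: when a language model generates text one token at a time, it has to read roughly all of its weights from memory for each token, so memory bandwidth divided by model size gives a ceiling on tokens per second. The code below is a rough estimate under that assumption. The 400 GB/s bandwidth figure and the 13-billion-parameter model are illustrative values chosen for the example, not numbers from this article.

```python
# Rule-of-thumb upper bound for single-stream token generation,
# assuming every token requires one full pass over the weights.

def decode_tokens_per_second_bound(num_params, bytes_per_param, bandwidth_gb_s):
    """Upper bound on tokens/second when generation is bandwidth-limited."""
    weights_gb = num_params * bytes_per_param / 1e9
    return bandwidth_gb_s / weights_gb


params = 13e9            # hypothetical model size
bandwidth = 400.0        # assumed memory bandwidth in GB/s

fp16_bound = decode_tokens_per_second_bound(params, 2.0, bandwidth)
int4_bound = decode_tokens_per_second_bound(params, 0.5, bandwidth)
print(f"16-bit weights: at most {fp16_bound:.1f} tokens/s")
print(f"4-bit weights:  at most {int4_bound:.1f} tokens/s")
```

Notice that no compute figure appears anywhere in the estimate. Shrinking the weights or widening the memory pipe moves the ceiling; adding more arithmetic units does not, which is the shift from compute-limited to bandwidth-limited workloads.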

Apple has a substantial opportunity to integrate generative AI into its core platform, mainly because of its chip and hardware-level integration. For example, by actively incorporating open-source generative AI models into its SDK and developer tools, Apple can leverage the evolving nature of the interaction between humans and machines in the digital world. The new M3-based computers thus provide developers with a compelling reason to remain within, or even return to, the Apple ecosystem.

Microsoft CEO Satya Nadella, in a video interview accompanying the launch of Qualcomm’s new chip, stated that in the not-so-distant future, computer interfaces will employ Microsoft’s Copilot AI, relegating the start button to the garbage bin of history. Instead, we would simply be speaking to our computers, instructing them on what to do.

Apple has the chance to embrace this future — it already has everything it needs, starting with its hardware.

October 30, 2023. San Francisco

Hey Om Malik,

My name is Cedric Williams and I am a NYC-based entrepreneur and a tech enthusiast who has been actively implementing AI into my daily life.

I read your article and I think that you definitely have a clear understanding of the AI competitive landscape and the relationship between AI and the latest advances in GPU technology.

I really like your “augmented intelligence” bit as well, I share the same perspective. I believe that AI augments, or improves, your innate intelligence and that you get out of AI what you put in.

Thank you for sharing your knowledge and expertise. Take care.